My Very Own Airflow Cluster

As someone who writes a lot of one-off batch scripts, the rise of DAG (Directed Acyclic Graph) task engines has made my life a lot better. I’ve used Luigi at work for about 2 years now. For feeding the backend of this website with data, I decided to set up an Airflow cluster.

As opposed to this paradigm:

- Write a script.

- Set it up in

cron. - Check the logs whenever something goes wrong.

You get a whole lot more bang for your buck, with similar amounts of work. DAG paradigm is more like:

- Write a script.

- Write an airflow DAG, where you call that script.

- Set a schedule for the script.

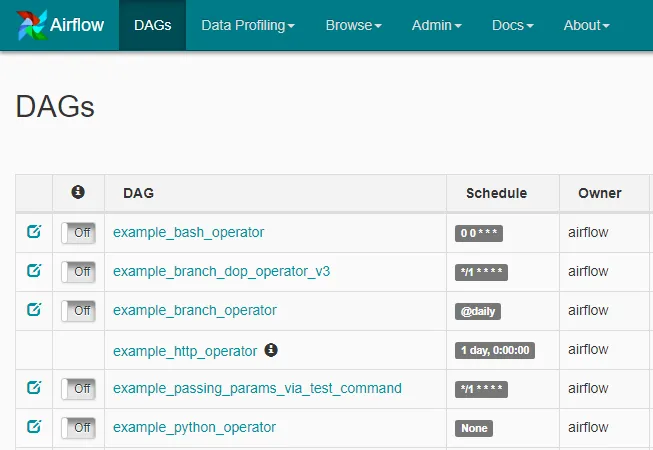

- Check on it from the built-in Web UI.

- Stop and restart tasks whenever you want to.

- View the console output from the Web UI.

It really helps certain types of batch processes scale past a certain point, while simplifying the process of managing and deploying them. You can relatively easily set up Airflow to schedule thousands of tasks without all that much more configuration past what you’d normally write.

The Good and Bad Reasons to Use Airflow

There’s a number of gotchas you should know prior to using one of the DAG engines, as they all fall into the same traps.

cronis incredibly difficult to beat for reliability. There’s a reason it’s ubiquitous. DAG engines are usually for tasks that need to scale past whatcronis built for. If you’re just trying to run a single script on the regular, stick with that instead.- DAG engines are meant for batch programs. If you need anything real-time, look elsewhere.

- Usually there’s a non-trivial amount of setup involved at the beginning.

- Sometimes scaling can be a pain. For Airflow, you’ll need to setup a task queue like Celery and a number of different nodes (web server, database, etc) once you scale past a certain point.

- There’s a relatively mature Docker image that you can reuse if you don’t want to configure it yourself.

Diving In

There are plenty of ways that you can configure Airflow. There are varying levels of complexity for varying levels of requirements, specified by the Executor types (i.e. what does what in your scenario).

- SequentialExecutor - Uses SQLite as a backend, and executes tasks for testing/debugging. This is generally what you’d run on your own machine if you’re just testing a workflow.

- LocalExecutor - Threaded local executor that uses just a DB connection as a backend.

- CeleryExecutor - For distributing tasks out to worker nodes. This uses Celery as a queue. Requires you to set up other nodes as worker nodes.

Here’s diagram of how it works.

For my purposes, I went with LocalExecutor, and set up the configuration in the outlined area above. I set up two nodes for this in my Proxmox cluster.

- Airflow web server. This was just based on a Ubuntu 16.04 instance.

- Postgres backend. For the LocalExecutor option, this is the only additional node required.

The LocalExecutor option (configured for using Postgres as a backend in this instance) has probably the highest payoff-to-effort ratio, compared to how difficult it can be to set up the CeleryExecutor. Once you’ve created the database, verified the connection works, and run airflow initdb, you’re good to go.

Now you can start making DAGs.

DAGS, Operators, Upstream, Huh?

There’s a lot of lingo to be learned when using Airflow.

- DAG - This is a single workflow, wherein you can arrange tasks and dependencies.

- Operator - This is a single unit of work that can be instantiated multiple times to achieve a particular output/goal/etc. There are things like

BashOperatorfor executing shell scripts, orPythonOperatorfor python files, etc. - DAG Run - A single execution of a DAG of tasks. Each operator in the DAG is instantiated and executed as it’s dependencies are completed.

Example DAG

Fittingly, the DAG is a graph, both directed and acyclic. Task execution flows in only one direction.

- The arrows indicate execution order.

- An arrow from task 1 to task 2 implies task 1 is a dependency for task 2. If task 1 fails, task 2 will not execute.

- Nodes can have multiple dependencies, like node 4 above.

- Nodes can have multiple downstream tasks, like nodes 1 and 3.

- Task execution timing is non-deterministic, so there’s no guarantee for whether sibling tasks 2 or 3 would execute first.

In Code

Here’s some example statements that would make a dag resembling the one in the graphic above.

default_args = {owner='airflow'} # server user account where this is run

dag = DAG(

'example', default_args=default_args)

t1 = BashOperator(task_id='t1', bash_command='echo "task 1"', dag=dag)

t2 = BashOperator(task_id='t2', bash_command='echo "task 2"', dag=dag)

t3 = BashOperator(task_id='t3', bash_command='echo "task 3"', dag=dag)

t4 = BashOperator(task_id='t4', bash_command='echo "task 4"', dag=dag)

t5 = BashOperator(task_id='t5', bash_command='echo "task 5"', dag=dag)

# now arrange the tasks in the dag

t2.set_upstream(t1)

t3.set_upstream(t1)

t4.set_upstream(t2)

t4.set_upstream(t3)

t5.set_upstream(t3)Now that this is defined, you can instantiate a DAG Run from the webserver.

Notes On Running Airflow

There have been a lot of justifiable claims against Airflow’s deployment story; it can be very frustrating. Scaling Airflow is confusing and fault-prone. For those who don’t want to deal with the headache of managing the installation on their own, Google offers a hosted Airflow services called Cloud Composer, or you can look into alternatives like Luigi or Mara. While I don’t fully love Airflow, I still haven’t found something better.

Headaches aside, it’s still worth using a DAG engine because of the great improvements in both the quality and visibility of batch processing jobs.

Features to Avoid

Since Airflow has been in the Apache Incubator for the past year or so, it’s been getting a lot of features rapidly. I choose to avoid these ones:

- X-coms. The biggest downside of Airflow compared to Luigi is that it doesn’t force you to write idempotent tasks in the same way. It’s easy to accidentally end up writing something that has outputs which don’t reproduce. If you treat a task like a function with a single input and output, it works best.

- Branching Operators - If you need to choose between multiple sets of logic, it’s best just to dynamically set your task dependencies at runtime, as opposed to creating a branching task.

- Bitshift Operators as Upstream/Downstream - Airflow recently introduced a way of setting upstream/downstream tasks by using bitshift operators like

<<or>>. This is bad. Explicit is good!set_upstream()is not particularly verbose.

In Conclusion

If you’re frustrated with cron or other simple ways of deploying batch processes, give it a shot. Be aware of the shortcomings, though.